How to Integrate Siri With Your App Thanks to Apple SiriKit

By YML

At Apple’s WWDC conference, one of the biggest announcements that made headlines everywhere was “Apple opens Siri API’s for developers”. And everyone’s excited.

I think you’ll agree with me when I say that digital experts are dying to jump at the opportunity to leverage Siri to create a more integrated user experience, one that can benefit both users and companies alike.

In this article we’ll dive deep into how Siri works, the fundamentals of implementing Siri, and how to design a great user experience with Apple’s own personal assistant.

You can jump to any section of the article from the links below:

How Siri works: Learn the basics of Siri to implement SiriKit into your app in the right way

How Siri interacts with a messaging app: Learn the logic behind the scenes so that you can replicate it for your own messaging app

How to implement Siri: Let’s explore how Siri is actually integrated into iOS

Bringing it back to SiriKit: Let’s go more in depth into SiriKit and discover INExtension

Visual user interface: Configure a custom user interface with the help of the Intents UI Framework

Designing a great user experience: An introduction to conversational interface design.

How Siri Works

To gain an understanding of how to implement SiriKit into your app, it’s important to understand how Siri works.

As we all know, once you launch Siri, you can simply ask a question by speaking into your iPhone. Siri will turn the audio from what you say into a text sentence that shows up on your phone screen. Siri then uses natural language processing to interpret the text, and converts it into a structured representation of what the user is trying to do.

Siri sees the world as ‘domains’. A domain is a broad category of things that Siri understands and knows how to talk about. So far, Apple has enabled only six domains that a developer can leverage: audio or video calling, messaging, payments, photo search, workouts, and ride booking.

So before you go and start thinking about all the different potential use cases for your app, keep in mind that Apple has only opened support for these six domains above.

If you can link your app to one of these six domains, great! You can start thinking about how to leverage SiriKit to your advantage. But if your app’s purpose falls outside of these domains, I’m afraid you’ll have to wait until Apple expands the list before you can do anything meaningful with Siri.

Within each of these six domains, there are a number of different ‘intents’. An intent describes a known action that Siri understands and can communicate to your application. Examples of phrases that map to intents include “Start a Skype video call with Ben” and “Start a Skype voice call with Ben”. Both of these phrases fall into the audio or video calling domain.

Siri supports a number of different intents.

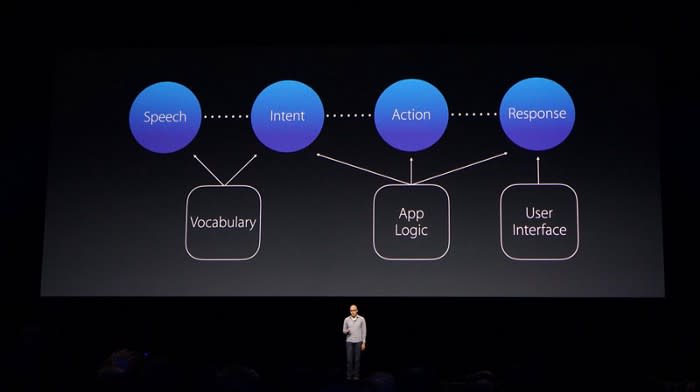

While processing an intent, Siri goes through a few different steps. The first step is what Apple calls the ‘vocabulary step’. It comes into play between the moment Siri recognizes speech from the user and the moment it creates the intent for your application to handle. Siri has a vocabulary database that it uses to understand commands and map them to intents. Your application provides vocabulary words to add to Siri’s database, so that Siri can map commands that use your app’s own terms to the intents your app supports. Giving Siri the right vocabulary is one of the most critical pieces to get right when exposing your application to Siri (we’ll cover that in the design section later on).

How Siri Interacts With a Messaging App

At the WWDC conference, we all saw video clips or images of how Siri can summon a messaging app without the user having to tap on the app’s icon. The question now becomes: how is this implemented, so that I could do the same if I own a messaging app?

Let’s assume that I speak the following phrase to Siri: “Hey Siri, send Ashish a WhatsApp message.”

Siri recognizes a few things right off the bat.

First, she recognizes that the user has referenced the WhatsApp application name.

When a user has allowed Siri to interact with the WhatsApp application, the application name gets exposed to the Siri vocabulary by default. If you are implementing Siri in your app, the name that users will use to summon your application is the same as the name that is listed below your app on the home screen.

The nice part is that Siri is able to pick up on an application name even if it is used in a variety of different contexts or parts of speech. For instance, it is very common to hear an app name used as a verb. In our example, Siri would’ve also recognized “Hey Siri, WhatsApp a message to Ashish”. No additional work is needed to add in this support.

The next thing that Siri recognizes is that this request falls in the ‘messages’ domain, and has the ‘send message’ intent.

The first role that WhatsApp will play in this case is to help Siri understand the speech. Ashish is not a name in the default Siri vocabulary database, but it is a name in the WhatsApp database. The name reaches Siri because the developers at WhatsApp share the user’s contact names from WhatsApp with Siri. In other domains you can provide vocabulary for different intents, such as the name of a photo album (for intents in the photo searching domain) or the name of a workout (for intents in the workout domain).

After that, Siri checks whether any information is missing from the given intent.

For instance, in this example Siri recognizes that in order to send a message in the messaging domain, you actually need to have content for the message. Intents from other domains will have different parameters that need to be filled in. For example, a money transfer app may need to know the currency for the transfer, or the amount of the transfer. This step is known as the ‘resolve’ step, where Siri will attempt to resolve any conflicts it has with completing the intent. To resolve our example of a message having no message content, Siri would simply ask the user what message they would like to send to Ashish. Siri has a couple of ways to resolve issues, which I cover below in “Design Guidelines”.

After this step is taken care of, Siri wraps up all of this data into a nice simple intent object that your application can make sense of.

It then passes that intent object to your application for you to perform the logic on, and waits for a response to confirm the action with the user.
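To make the resolve step more concrete, here is a minimal sketch in plain Swift. It assumes a simplified model: `SendMessageIntent`, `Resolution`, and `resolve` are hypothetical stand-ins written for this illustration, not the SiriKit types (in the real framework this work is done through handling protocols such as `INSendMessageIntentHandling`). The point is only to show how a missing parameter turns into a follow-up question.

```swift
import Foundation

// Hypothetical stand-in for the intent object Siri builds; not a SiriKit type.
struct SendMessageIntent {
    var recipient: String?
    var content: String?
}

// Hypothetical result of resolving a single parameter.
enum Resolution: Equatable {
    case success(String)   // the parameter is usable as given
    case needsValue        // Siri should ask the user for it
}

// Resolve one parameter: a missing or blank value means Siri has to ask.
func resolve(_ value: String?) -> Resolution {
    guard let text = value?.trimmingCharacters(in: .whitespaces), !text.isEmpty else {
        return .needsValue
    }
    return .success(text)
}

// "Hey Siri, send Ashish a WhatsApp message" names a recipient but no content.
let messageIntent = SendMessageIntent(recipient: "Ashish", content: nil)
let recipientResult = resolve(messageIntent.recipient)
let contentResult = resolve(messageIntent.content)
```

Here `recipientResult` resolves successfully, while `contentResult` comes back as `needsValue`, which is the cue for Siri to ask what the message should say.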

How to Implement Siri

Now that we know how Siri works, and that our application falls into one of the six eligible Siri domains, it’s time to explore how Siri is actually integrated into iOS.

App Extensions:

The framework that Apple has provided to enable Siri is called SiriKit. To understand how SiriKit works, we first have to understand Apple’s concept of ‘extensions’.

An app extension lets you extend custom functionality and content beyond your app and make it available to users while they’re interacting with other apps or the iOS system. A great example is a keyboard extension that runs when a user writes a text message and selects a custom keyboard from the different keyboard options.

The benefit of app extensions is that your users are able to interact with your application’s functionality even when they are using a separate app and your app is not in the foreground.

App extensions are delivered to users by being packaged in a ‘containing app’, and are installed when the user downloads the containing app from the App Store.

Examples of extensions:

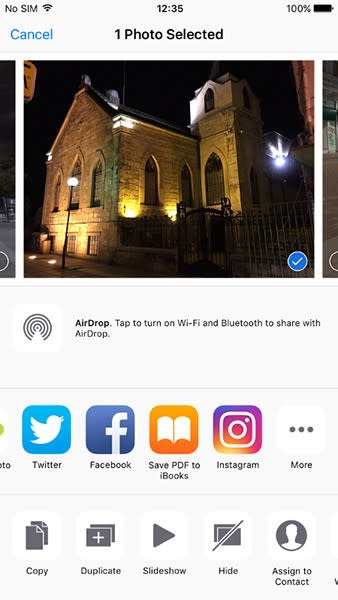

To fully understand how Siri works, we need an in-depth understanding of how extensions work. Let’s look at the Instagram sharing extension as an example:

In the screenshot above, a user is browsing the native ‘photos’ app that comes pre-installed on the iPhone, and presses the ‘share’ icon. In this case, the ‘photos’ app would be considered the ‘host app’. When a user taps the Instagram extension from the list of sharing options, the operating system launches the Instagram extension.

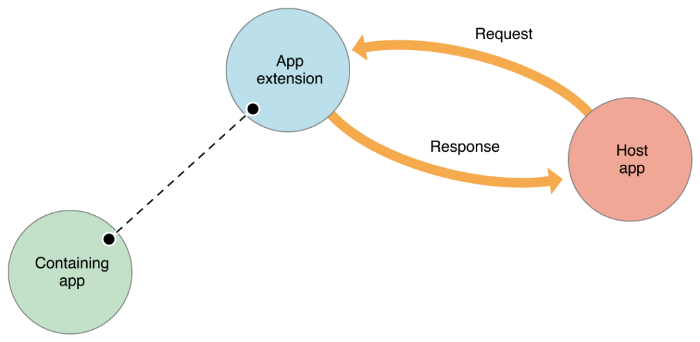

The mechanism for communication between the host app and the app extension is a request/response action. Simply put, the host app sends a request to the app extension, the extension receives the request, processes it, and then returns the response to the host app.

The figure above is taken from Apple’s developer documentation, and represents the interaction between host apps and extensions. The ‘containing app’ would be Instagram in our example.

The dotted line represents the limited communication between the containing app and the app extension. There is no direct communication between the containing app and the app extension. Typically, the containing app isn’t even running when the extension is running.

Bringing it Back to SiriKit

SiriKit integration is implemented as an extension in this same way, using a new class in iOS 10 called INExtension (the Intents extension), which is part of the Intents Framework.

Let’s take a look at another example of how a Siri extension would work.

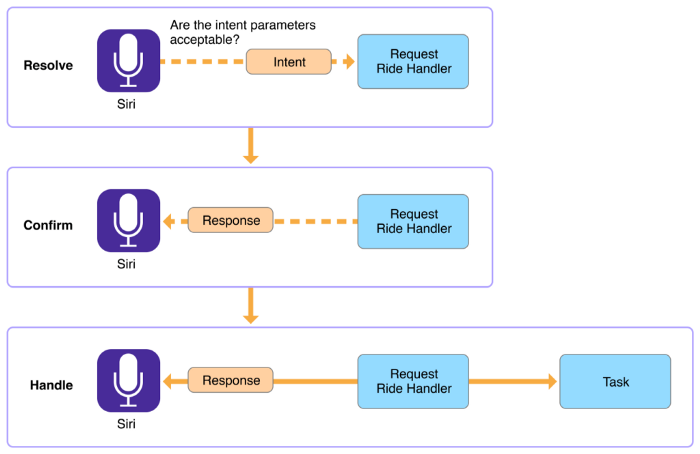

This time let’s look at how Uber would implement a Siri extension in the ridesharing domain. The developers of Uber would build and deploy an extension that comes packaged with the native Uber application, so that when users update their app, they have the extension installed on their phone as well.

The way the app extension interacts with Siri is through the concept of intents as we described above. Siri will use natural language processing to capture the intent that a user is trying to make, and pass that intent to the extension. For instance if a user says “Order me an Uber” to Siri, Siri will confirm the parameters are acceptable, then pass the ‘request ride’ intent to the app extension and wait for a response. The app extension will then process the intent, and return the response of what is going to happen back to Siri. Siri would then say something like “Ok, I can order you an Uber that will be here in four minutes, should I place the order?”

Once you confirm the order, Siri then triggers a handle method in the extension that performs the task. Uber would implement the code to place the Uber request in the handle method of the app extension. All of this happens without a user having to open the Uber app and with no communication between the containing Uber app and the app extension.
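The split between confirming and handling can be sketched in a few lines of plain Swift. This is a hypothetical model written for this article, not the Intents Framework API: `RideRequest`, `RideQuote`, and `RideExtension` are made-up names, and the four-minute estimate is a fixed value for illustration. What it shows is that confirming only reports what would happen, and the booking itself lives in the handle method.

```swift
// Hypothetical model of the confirm/handle exchange; not the Intents Framework API.
struct RideRequest {
    let pickup: String
}

struct RideQuote: Equatable {
    let etaMinutes: Int
}

final class RideExtension {
    private(set) var bookedRides: [RideRequest] = []

    // Confirm: report what would happen, without booking anything yet.
    func confirm(_ request: RideRequest) -> RideQuote {
        return RideQuote(etaMinutes: 4)   // fixed value for illustration
    }

    // Handle: called only after the user says yes; the booking happens here.
    func handle(_ request: RideRequest) -> String {
        bookedRides.append(request)
        return "Ride booked from \(request.pickup)"
    }
}

let rideExtension = RideExtension()
let rideRequest = RideRequest(pickup: "Current Location")

// Siri first asks the extension what would happen, then reads it to the user.
let quote = rideExtension.confirm(rideRequest)

// Only a "yes" from the user leads to the handle call.
let userSaidYes = true
let outcome = userSaidYes ? rideExtension.handle(rideRequest) : "Cancelled"
```

Keeping the confirm step free of side effects means a user who says “no” leaves nothing to undo.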

This example from Apple’s Developer Documentation is a great illustration of the ridesharing example I just gave:

The orange boxes represent the intent and response data being passed to and from the extension represented in the blue boxes.

Visual User Interface

At some points in the Siri interaction, users will see visual interface elements.

For instance, while a request is being handled by your application extension, Siri will show a standard waiting UI. In this situation, the standard UI cannot be changed. Your app has a few seconds to handle the intent and send a response to Siri.

In addition to the Intents Framework, Apple has also provided the Intents UI Framework, which enables you to configure a custom user interface to show to the user.

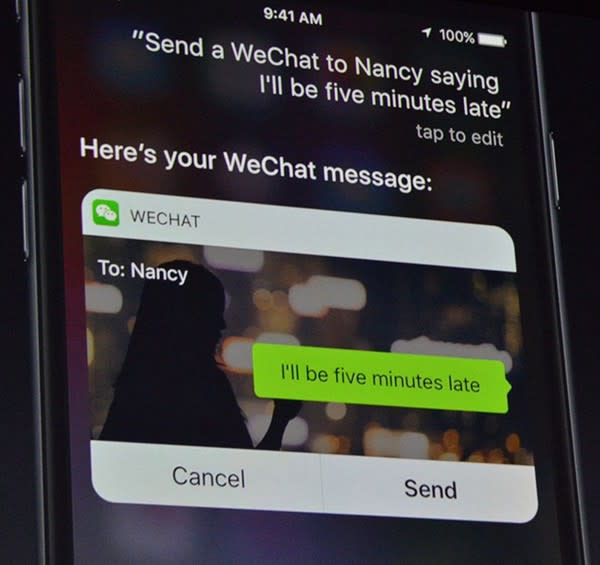

The example given at the keynote with WeChat shows the WeChat extension providing a custom UI back to Siri to show to the user.

This view is created with the siriSnippet context, and can be customized to an extent.

Out of the box, Siri already has a default view for all intents. You can, however, replace the default Siri view with your own custom view if you wish. You can add anything you want inside the view. You can use these views to show additional information, update the status of a long running response, etc. The view will always show up alongside other Siri information in a standard way. The screen will always display the app icon and the app name in a sash at the top, and, at times, on buttons across the bottom.

It is very important to keep in mind that this view is entirely non-interactive. You can use great animations or imagery, but not controls or tappable areas, because users cannot interact with them. Users may only interact with the standard Siri buttons that get placed below the view when necessary.

In the example above, the user asked Siri to send a message using WeChat. Siri then passed the send message intent to the WeChat extension. The WeChat extension processed this request and responded with this UI snippet to the user for confirmation.

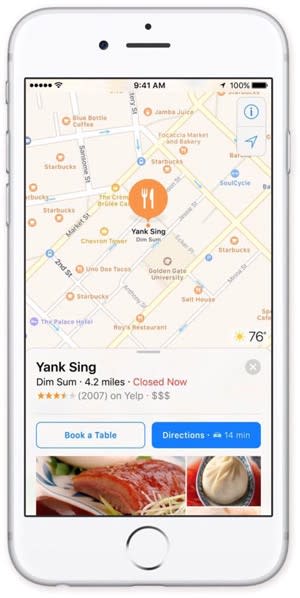

When you create an extension using the Intents Framework, it is not limited to Siri: users are automatically able to interact with the same extension in the Maps app.

For displaying custom UIs in the Maps app, Apple has provided the mapsCard context, which will display a custom Maps card.

Here is an example from the Apple website showing the OpenTable extension for booking a reservation at a restaurant:

An important thing to note is that you can only provide one UI view for each extension. That view can be configured differently depending on whether the user comes from the Maps app or Siri, but cannot constitute multiple screens or an entire flow.

There is one domain that is not available in both Siri and Maps: ‘restaurant reservations’. Restaurant reservations are handled by the Intents Framework like the other domains, but users can only interact with them in Maps, not in Siri. My guess is this will be added to Siri in the near future.
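As a rough illustration of “one view, configured per context”, the sketch below uses plain Swift with hypothetical stand-ins. `HostContext` mirrors the two contexts mentioned in this article, while `SnippetLayout`, its fields, and the specific values are assumptions made up for the example; the real context type and view controller protocol live in the Intents UI Framework.

```swift
// Hypothetical stand-ins; the real context type lives in the Intents UI Framework.
enum HostContext {
    case siriSnippet
    case mapsCard
}

// Made-up description of how the single view should lay itself out.
struct SnippetLayout: Equatable {
    let showsMap: Bool
    let maxHeight: Int
}

// One view, configured differently depending on where it is hosted.
func layout(for context: HostContext) -> SnippetLayout {
    switch context {
    case .siriSnippet:
        // Siri has no map of its own, so the snippet includes one.
        return SnippetLayout(showsMap: true, maxHeight: 200)
    case .mapsCard:
        // Maps already shows the map, so the card stays compact.
        return SnippetLayout(showsMap: false, maxHeight: 120)
    }
}

let siriLayout = layout(for: .siriSnippet)
let mapsLayout = layout(for: .mapsCard)
```

The same function serves both hosts, which matches the constraint that an extension supplies a single view rather than a separate screen per context.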

Designing a Great User Experience

Introduction to conversational interface design

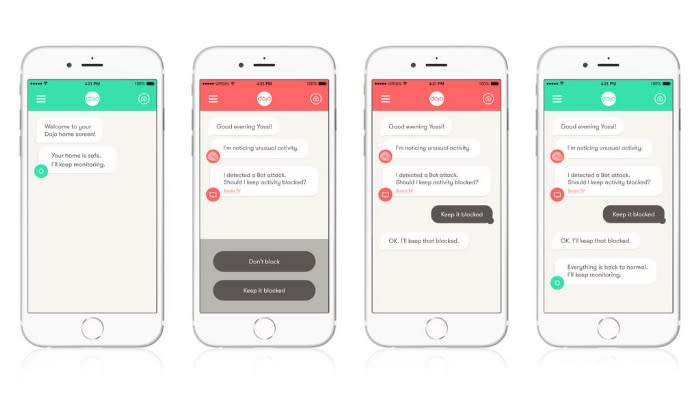

When designing for Siri, it is important to shift your design thinking, because the user interface in Siri is not the same as a typical visual interface that appears on a screen. Visual interfaces have well understood behaviors and patterns, and everyone is pretty familiar with using them. Visual interfaces are part of an application. That application gives the context of what the user is trying to do, the options that they have available, and the details of those options.

As you start to work with conversations though, things change. It can feel strange not having information on a screen. A user can say whatever they want, or they may not know what to say. Siri is a conversational interface. The context of what is happening is established through the conversation between the user, Siri, and your apps.

Without a visual interface to refer to, users have to keep all the information about the context in their heads. Siri attempts to ease this by not overwhelming a user with too many details, and only providing specific and relevant information to the user. The Siri conversational interface is shaped by a set of questions and responses with the user, which your application has the opportunity to shape.

Let’s take the example of a user sending a message through Siri, and Siri responding with the confirmation message “Ready to send it?” There is a whole array of different responses that a user can give. Some users will say “Send it”, others will say “No”, and some may even flat out ignore it. All of these are valid responses, and Siri can handle all of them.

When designing conversational interfaces, it is also vital to capture as much context about the user as possible, and respond with appropriate information based on that context. For example, if I were to hold down the home button and ask Siri “Do I have any reminders?”, Siri would respond with “You currently have eight reminders.”, and Siri would show me a list of these reminders that I can interact with.

This response and list shows up because Siri knows that my phone is most likely in my hand. This example changes, however, if I am using hands-free mode. If I ask Siri “Do I have any reminders?” in hands-free mode, Siri would respond with “I found eight reminders, the first one is… the second one is… should I read out the rest?”

Notice here that Siri responds in a more conversational way because she knows you are probably doing something where your phone is not easily accessible with your hands. Siri also asks a question to keep the conversation going if necessary, but the user stays in control. Siri can adapt to this hands-free mode if you have CarPlay on, if you have headphones in, if you have the VoiceOver accessibility setting on, and in other contexts.

Vocabulary design with Siri

Many apps have unique ways of describing things. Siri needs your assistance to understand words and phrases that are unique to your application. Some of the phrases that Siri needs help with are part of your app, meaning every user knows that word or phrase, while others are user-specific.

App-specific vocabulary is known to every user of your application. Some common examples would be workout names or vehicle types. SiriKit enables you to provide examples of how users will interact with these phrases. These examples teach Siri how users will interact with your application. SiriKit asks that you provide it with the primary way you think users will interact with your application through Siri. This is your ‘hero message’.

For example, you could provide SiriKit with the phrase “Start a workout with Sworkit” as your hero message if you are building a workout app. In addition to the hero message, there are certain parameters for which you can provide your own vocabulary types. In the workout example, we can provide our own unique workout names for specific workouts so that Siri can recognize which workout it needs to start. For these specific workouts, you can also provide Siri with specific examples of how users can interact with your app. For instance, in the fitness app we have been describing, one example might be “Do a strength workout with Sworkit”.

The other type of vocabulary that Siri needs to understand is user-specific vocabulary. User-specific vocabulary is terminology that is specific to an individual user. A great example of this is a contact list in a VoIP calling application. Each user’s contact list will be unique to that specific user. When dealing with user-specific vocabulary, SiriKit also offers an opportunity that many people will gloss over, but that lets you provide an optimized user experience when implemented properly. It starts with providing Siri a properly organized, ‘ordered set’ of content.

Let’s take the VoIP calling application for example. When we provide Siri with contacts for our VoIP calling application, we have the opportunity to provide a set of contacts that Siri will analyze from top to bottom, with the first ones having the highest priority. In our example, we could provide Siri with a generic list of all our contacts, perhaps in alphabetical order. We could, however, do one better and provide our recent contacts first, then the rest of our contacts after. We could even step it up further and provide our favorite contacts first, followed by our recent contacts, and then the rest of the contacts after. When Siri crawls the contacts for whom to call, she will give priority to the top of the list. This can greatly improve user experience. For instance, if multiple people in a contact list have the first name “Derek” and a user says “Call Derek using our VoIP App”, Siri will call the right Derek if the list is prioritized properly. Otherwise it may dial the wrong one and never know it was wrong.
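The prioritization described above can be sketched as a small helper in plain Swift. The function name `prioritizedContacts` and the contact names are made up for illustration. In a real app the resulting list would be handed to Siri as an ordered set through the `INVocabulary` class; that call is left out here so the sketch runs outside an app.

```swift
// Build a prioritized, duplicate-free contact list:
// favorites first, then recents, then everyone else alphabetically.
func prioritizedContacts(favorites: [String], recents: [String], all: [String]) -> [String] {
    var seen = Set<String>()
    var ordered: [String] = []
    for name in favorites + recents + all.sorted() {
        // insert(_:) reports whether the name was new, so each contact appears once,
        // at the highest-priority position where it was first seen.
        if seen.insert(name).inserted {
            ordered.append(name)
        }
    }
    return ordered
}

// Made-up contacts: two Dereks, one of them a favorite.
let contactVocabulary = prioritizedContacts(
    favorites: ["Derek Chan"],
    recents: ["Ashish Rao", "Derek Chan"],
    all: ["Derek Adams", "Ashish Rao", "Ben Lee", "Derek Chan"]
)
// contactVocabulary == ["Derek Chan", "Ashish Rao", "Ben Lee", "Derek Adams"]
```

With this ordering, the favorite “Derek Chan” sits above “Derek Adams”, so a request to “Call Derek” is more likely to land on the contact the user actually means.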

Conversational interface design guidelines

In designing for conversational interfaces, there are a few steps you should pay particular attention to.

Image source New Deal Design

Training users on how to use your app with Siri

If a user asks Siri “What can you do?”, Siri will show a list of commands that she can respond to. This includes app-specific information, which is where you can teach users how to interact with Siri and your application.

Apple allows you to pass examples to Siri in order to train users on how they can use your app with Siri. When designing for this training experience, here are some guidelines you should follow:

If your app falls into one of the six domains available for use in the Intents Framework, now is the time to start implementing and testing your Siri and Maps extensions, just in time for the iOS 10 release.